The Physics of Language Models

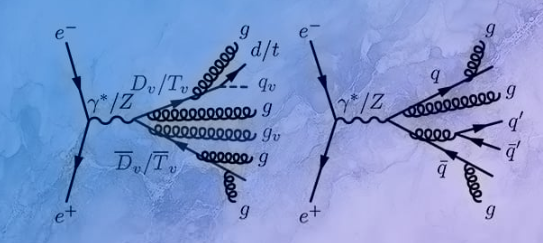

And What We Actually Know About Knowledge

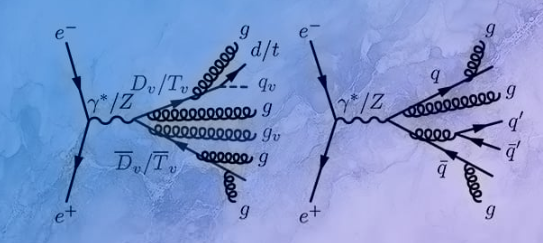

TL;DR: Yoshua Bengio’s 2003 paper “A Neural Probabilistic Language Model” is the genesis of modern NLP. Before this paper, language models were statistical counting machines. After it, they became ...

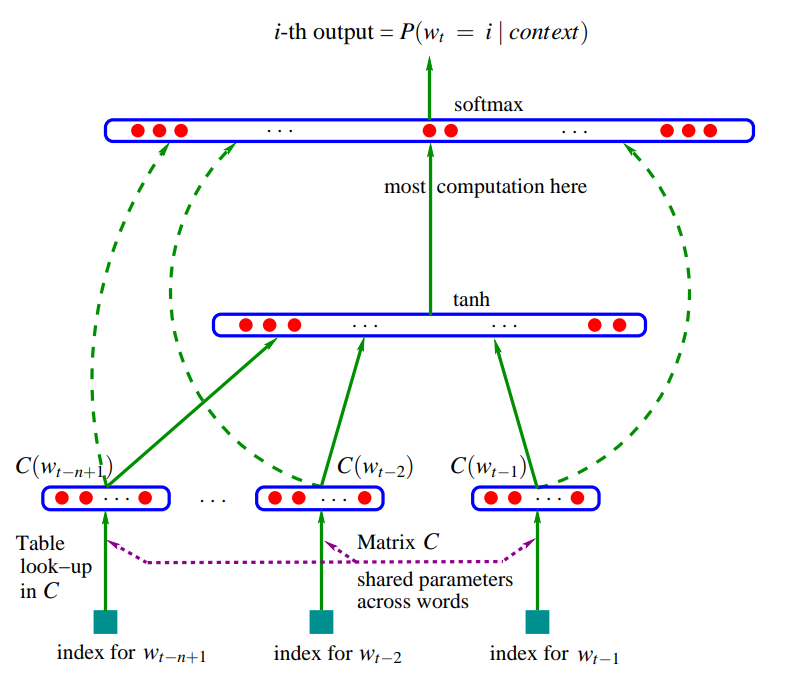

Review of all the books I read in 2024.

Talk Trois Log

Review of all the books I read in 2023.

TL;DR: Before normalization, training deep networks was like trying to stack cards in a hurricane—one small change would topple everything. BatchNorm (2015) changed the game by stabilizing training...

TL;DR: Dropout started as a simple trick to prevent overfitting—randomly turn off neurons during training. But it evolved into something profound: a gateway to understanding uncertainty in deep lea...

TL;DR: Every time you train a neural network, you’re solving an optimization problem in a space with millions of dimensions. This is the story of how we went from basic SGD taking baby steps to Ada...

Review of all the books I read in 2022.

Detroit: Become Human is a 2018 third-person game, built around three "deviant" androids...